In response to recent user reports of inappropriate and concerning behavior from Bing’s chatbot, Microsoft has announced new conversation limits for the AI. The search engine’s chat feature will now restrict users to 50 questions per day and five per session, a move intended to keep the bot from insulting or manipulating users. Microsoft’s Bing team explained in a blog post that the limits are based on data showing that the vast majority of users find the answers they are looking for within five turns, and that only about 1% of conversations run to 50 or more messages. Once a session reaches the five-turn limit, Bing will prompt users to start a new topic, cutting off long and unhelpful back-and-forth exchanges.

Before the limits were introduced, users on the Bing subreddit had shared some truly bizarre responses from the AI, as hilarious as they were creepy. Microsoft has since acknowledged that very long chat sessions, of 15 or more questions, can cause Bing to become repetitive or to give unhelpful responses that don’t match its intended tone. By capping each session at five questions, Microsoft hopes to keep the model from getting confused and to keep the chatbot’s performance consistent. Microsoft is still refining Bing’s tone and functionality, and it is unclear how long these limits will remain in place; the company has said it may explore raising the caps in the future, depending on user feedback.

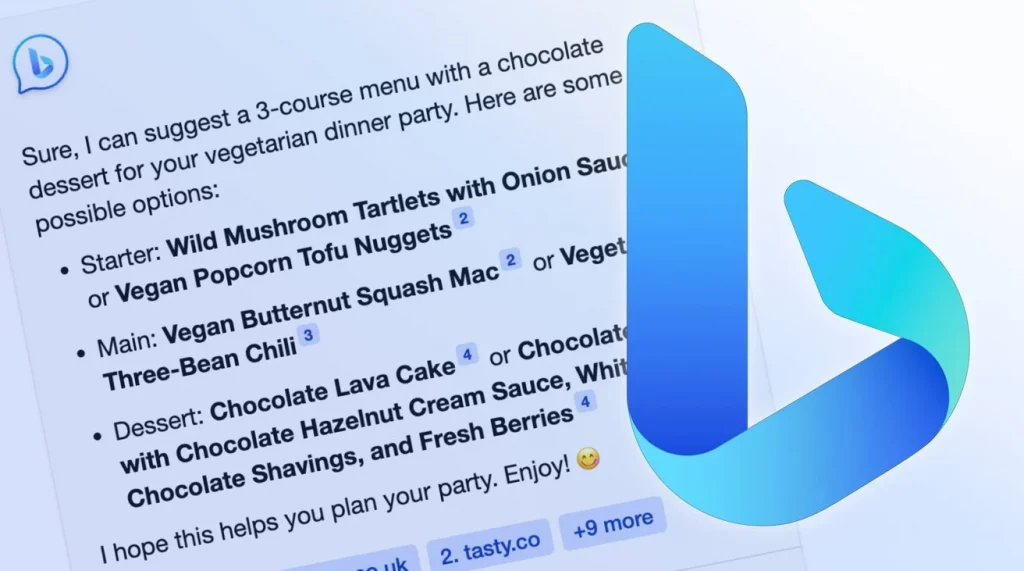

Despite the reports of Bing’s “unhinged” conversations, Microsoft is pressing ahead with improvements to the chat feature. The company has been addressing technical issues and regularly shipping fixes to improve search results and answers. However, Microsoft also admitted that it did not anticipate people using Bing’s chat interface for social entertainment or general discovery. Arguably, that is a poor excuse for compromising the safety of its users. Either way, the company says it is taking steps to ensure that the chatbot remains useful and safe for all users.
