Video generative AI, also called deep-learning-based video synthesis, learns patterns, features, and styles from large datasets of videos and uses them to generate new video content. Using neural networks, including generative adversarial networks (GANs), a model can produce output in different styles and effects based on the user’s input or prompts. It’s important to note that the AI is not “creating” new content from scratch; it is remixing and synthesizing what it learned from existing content in new ways.
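To make the adversarial idea behind generative adversarial networks concrete, here is a minimal sketch of the two competing loss functions in plain Python. It is illustrative only: the function names and the example probabilities are assumptions made for this sketch, and it does not describe how any particular product, including Runway's, is implemented.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Cross-entropy loss the discriminator tries to minimize.

    d_real: probability the discriminator assigns to a real sample being real.
    d_fake: probability it assigns to a generated sample being real.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)

# Hypothetical probabilities: the discriminator is rarely fooled here,
# so its loss is low while the generator's loss is high.
print(discriminator_loss(d_real=0.9, d_fake=0.1))
print(generator_loss(d_fake=0.1))
```

During training, the two networks are updated in turn, so the generator is pushed toward output the discriminator can no longer tell apart from real footage.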

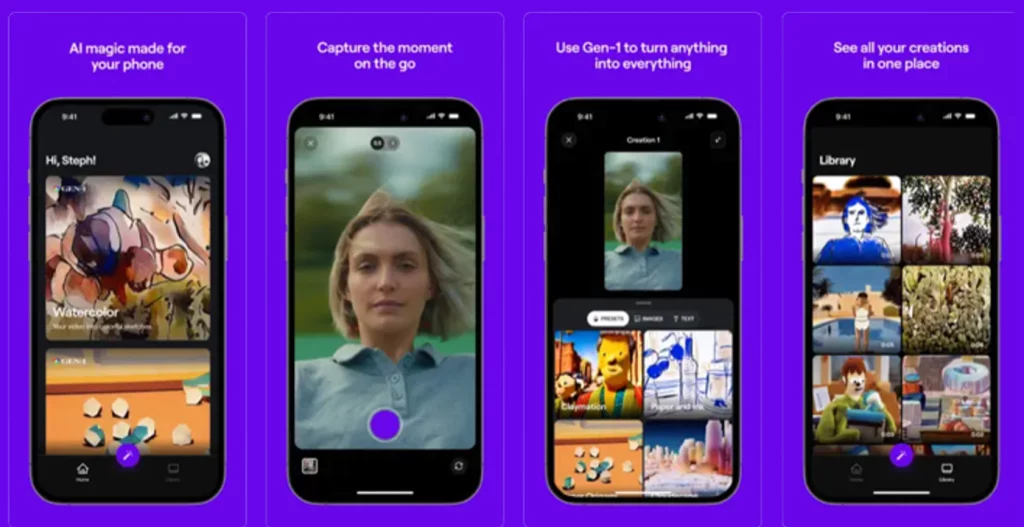

Users can choose claymation, sketches, watercolour and more.

AI startup Runway has launched its first mobile app, giving users access to its video-to-video generative AI model, Gen-1. The app is currently available only on iOS devices. It lets users generate an AI video from footage recorded on their phones, or transform existing videos using text prompts, images or style presets. Runway’s presets include options such as “Cloudscape”, along with transformations that make videos look like claymation, charcoal sketches, watercolour art, paper origami and more.

While the results aren’t perfect, the app could be valuable for content creators looking to spice up their social media posts. However, the ethical implications of generative video AI must also be considered. As the technology evolves, it raises questions about the ownership and copyright of generated content, as well as the potential for misuse or manipulation of videos. It is important for companies like Runway to prioritize these considerations as they develop and promote their technology.
