In a groundbreaking move, Microsoft has unveiled two custom-designed chips: the Azure Maia AI Accelerator and the Azure Cobalt CPU. The chips, the result of years of covert refinement in a silicon lab on Microsoft’s Redmond campus, are set to revolutionize the landscape of AI services and cloud computing.

Maia AI Accelerator

The Maia chip is tailored to accelerate AI computing tasks, a direct response to the burgeoning costs associated with delivering AI services. Specifically designed to power Microsoft’s $30-a-month “Copilot” service, the Maia chip is optimized for running large language models, including those integral to Microsoft’s Azure OpenAI service. By routing most AI efforts through common foundational AI models, Microsoft aims to drastically improve efficiency and tackle the challenge of high AI service costs.

Azure Cobalt CPU

Complementing Maia is the Cobalt chip, a central processing unit (CPU) designed to compete with Amazon Web Services’ Graviton series of in-house chips. Built on technology from Arm Holdings, Cobalt has already been tested by powering Teams, Microsoft’s business messaging tool. Unlike its counterparts, Cobalt will not be restricted to internal use; Microsoft plans to sell direct access to Cobalt, presenting a competitive alternative to AWS’s chips.

Acknowledging the stiff competition with Amazon Web Services, Microsoft is keen to emphasize the competitive performance and price-to-performance ratio of its Cobalt chip. This emphasis fits Microsoft’s overarching strategy of designing chips to enhance efficiency, reduce costs, and ultimately offer superior solutions to customers.

Technical details reveal that both Maia and Cobalt chips are manufactured using cutting-edge 5-nanometer technology from TSMC. The Maia chip, in a cost-effective twist, employs standard Ethernet network cabling, eschewing the more expensive custom Nvidia networking technology used in OpenAI’s supercomputers.

Analysts view Microsoft’s approach with the Maia chip as a clever maneuver, allowing the company to capitalize on selling AI services in the cloud until personal devices become powerful enough. AWS’s Graviton chip, reportedly boasting 50,000 customers, underscores the fierce competition in the cloud chip market, with AWS emphasizing ongoing innovation in delivering future chip generations.

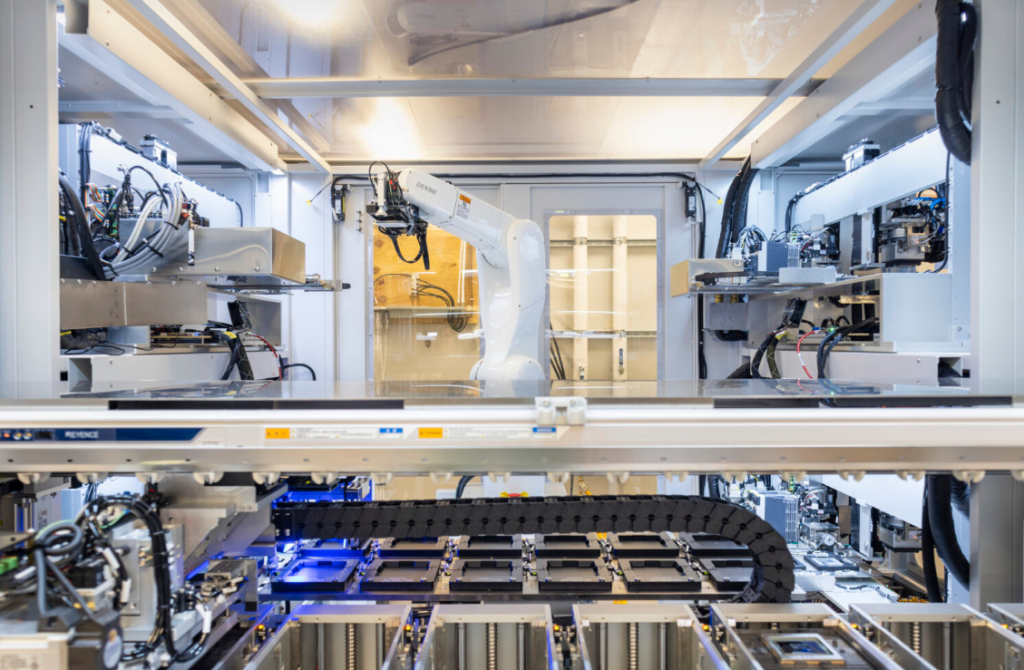

The unveiling of Maia and Cobalt chips marks a significant step in Microsoft’s quest for efficient, scalable, and sustainable computing power. These chips not only address the demands of AI workloads but also play a crucial role in optimizing infrastructure systems from silicon to servers for both internal and customer workloads.

As the tech giant sets its sights on designing second-generation versions of Maia and Cobalt, the future promises continued optimization across every layer of the technological stack for enhanced performance, power efficiency, and cost-effectiveness. Microsoft’s venture into custom-designed chips is poised to reshape the AI landscape and propel the industry toward new horizons.
