Oppo, the Chinese smartphone vendor, showcased several of its new camera technologies at the Conference on Computer Vision and Pattern Recognition (CVPR) 2020, where the company earned two first-place and two third-place awards.

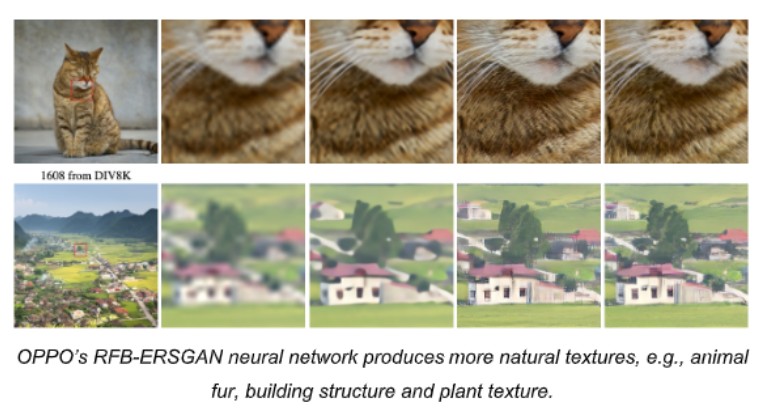

The technologies that earned Oppo these awards are perceptual extreme super-resolution, visual localization, and human activity recognition in videos. In the perceptual extreme super-resolution challenge, Oppo’s research team was tasked with upscaling a single image by a factor of 16. The goal was to develop an AI model capable of producing high-resolution results with the best perceptual quality and the closest resemblance to the real subject.

In this challenge, Oppo’s team demonstrated the effectiveness of its RFB-ESRGAN neural network, which beat the 280 other participants. In layman’s terms, the technology can be used in smartphones to turn low-resolution images into high-resolution ones while retaining more detail. It can also be applied to restoring old or damaged photos and even to satellite imagery.

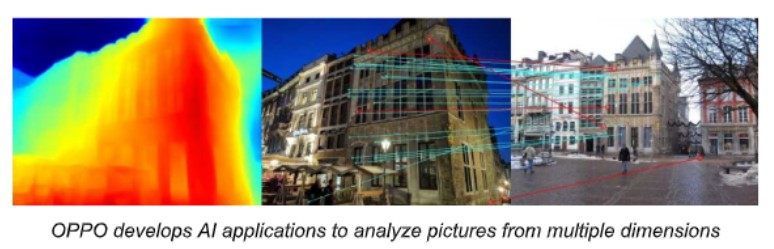

Oppo also took first place in the Visual Localization for Handheld Devices challenge, for which it built a monocular visual localization pipeline that uses semantic and depth cues to pinpoint where an image was taken. Oppo placed first in the outdoor localization track and third in the indoor track. The technology has a wide range of applications, including robotics, augmented reality, and navigation. It could also let AI guide users through the camera with advanced location tracking and navigation.

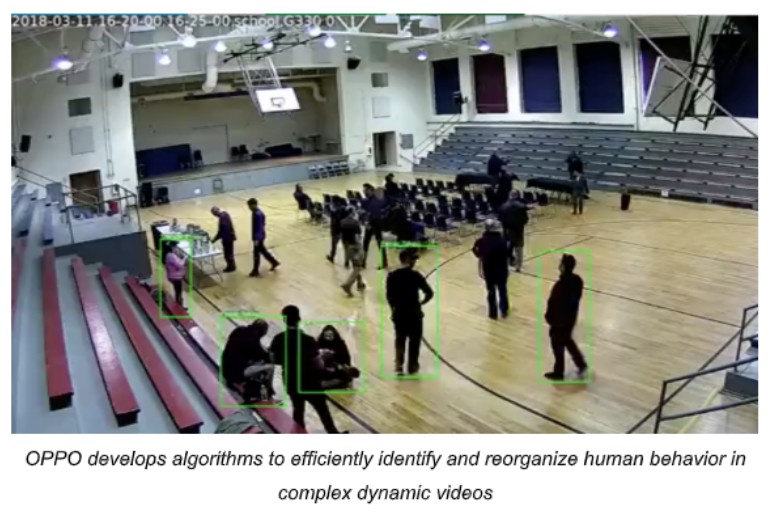

In the Activity Detection in Extended Videos challenge, Oppo came in third. The company had to design automatic activity detection algorithms that could detect and recognize the people in each frame and identify multiple human activities in complex, dynamic videos. This technology could play a major role in future human-computer interaction and even in sports video analysis.

(Via)