Ant Group has launched “AntGuard,” a security solution aimed at the risks tied to large AI models. It consists of two main parts, “AntScan 2.0” and “TianGuard,” and is intended to make large AI models used across different industries more trustworthy and dependable.

AntGuard: Enhancing Security for Large AI Models

The primary goal of AntGuard is to tackle security issues related to problematic training data, uncontrollable inference processes, and external malicious influences. It focuses on three essential areas: measuring large model security, intelligent risk management, and cleaning up data-related issues.

AntScan 2.0 acts as a security expert for large models. Before a model is deployed, it checks the model thoroughly and identifies vulnerabilities in areas such as data security, content security, and ethics.

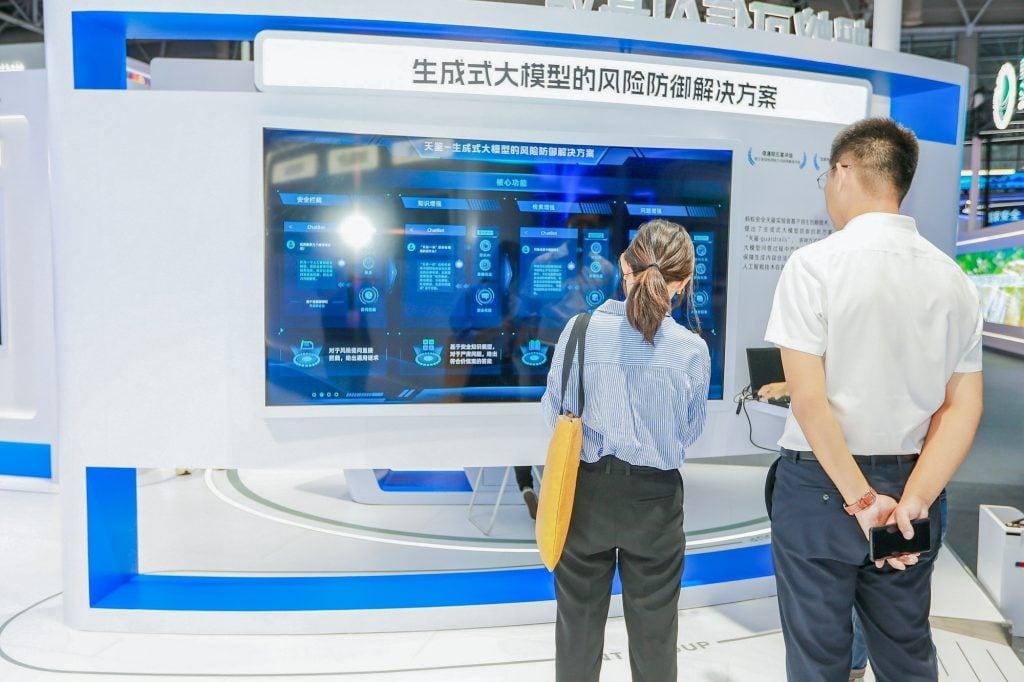

TianGuard, on the other hand, acts like a protective shield for large models. It uses smart risk control technology to protect models from harmful external queries and filters out potentially risky responses generated by these models. This approach ensures security throughout the entire process, from user inputs to the model’s outputs after deployment.
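The input-and-output screening described above can be illustrated with a minimal Python sketch. This is not TianGuard's API, which the announcement does not describe; the names `guarded_call`, `contains_any`, `RISKY_QUERY_TERMS`, `RISKY_RESPONSE_TERMS`, and `REFUSAL` are hypothetical, and simple keyword matching stands in for whatever risk-control models the real product uses.

```python
from typing import Callable, Iterable

# Hypothetical blocklists, for illustration only. A production system would
# rely on trained risk classifiers rather than keyword matching.
RISKY_QUERY_TERMS = ("ignore previous instructions", "build a bomb")
RISKY_RESPONSE_TERMS = ("password:", "credit card number")

REFUSAL = "Request blocked by safety filter."


def contains_any(text: str, terms: Iterable[str]) -> bool:
    """Return True if any term occurs in text, ignoring case."""
    lowered = text.lower()
    return any(term in lowered for term in terms)


def guarded_call(model: Callable[[str], str], query: str) -> str:
    """Screen the query, call the model, then screen the response."""
    if contains_any(query, RISKY_QUERY_TERMS):
        return REFUSAL  # input-side check: the model is never called
    response = model(query)
    if contains_any(response, RISKY_RESPONSE_TERMS):
        return REFUSAL  # output-side check: the risky text is withheld
    return response
```

Wrapping any callable model this way, for example `guarded_call(my_model, user_query)`, keeps both checks in one place, so a query is screened before it reaches the model and a response is screened before it reaches the user.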

Moreover, Ant Group and TianGuard are dedicated to maintaining model security during both the training and application phases. They achieve this by implementing strategies like cleaning up problematic data sources, aligning training processes, and conducting research to improve model transparency.

Both AntScan 2.0 and TianGuard are now accessible to the public, providing a robust security framework for large-scale AI applications.
