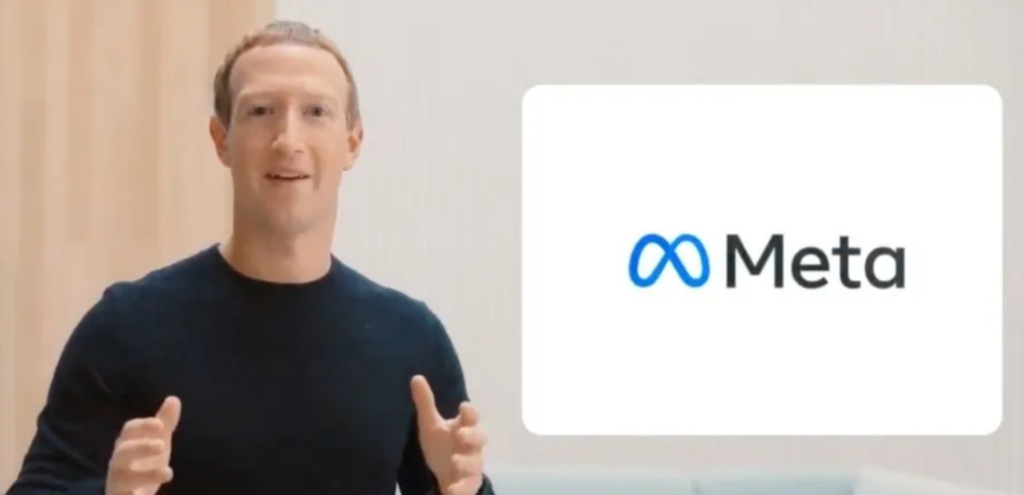

Meta Platforms, the parent company of Facebook and Instagram, faces a consolidated copyright-infringement lawsuit brought by prominent authors including Sarah Silverman and Michael Chabon. At the heart of the complaint is the allegation that Meta used thousands of copyrighted books without authorization to train its large language model, Llama.

According to the authors, Meta went ahead with the contested dataset even though its own lawyers had warned about the legal risks of training AI on pirated books. The case grew more complicated when chat logs surfaced in which Tim Dettmers, a Meta-affiliated researcher, discussed obtaining the dataset on a Discord server.

In those logs, Dettmers described exchanges with Meta's legal department about whether book files could lawfully be used as training data. The lawyers reportedly advised against using them right away, citing concerns about "books with active copyrights." Participants in the chat debated whether training on such data could be defended under fair use, the U.S. legal doctrine that permits certain unlicensed uses of copyrighted works.

The litigation began over the summer as two separate suits against Meta, which were recently consolidated into one. Last month, a California judge dismissed part of the Silverman lawsuit, and the authors have since sought to amend their claims, so the shape of the case is still evolving.

The outcome could matter well beyond Meta. If the authors prevail, building data-hungry AI models could become more expensive, as companies face closer scrutiny and demands for compensation from content creators. New rules in Europe could also require AI companies, Meta among them, to disclose the data used to train their models, exposing them to further legal risk.

Meta's Llama models are at the center of the dispute. The first version was trained in part on the "Books3 section of ThePile," but Meta has not disclosed the training data for Llama 2, released this summer and seen as a potential disruptor in the market for generative AI software.

