Elon Musk’s AI startup xAI has introduced Grok-1.5, which reportedly brings significant improvements over the previous version.

An improved short-term memory

Grok-1.5 can now process up to 128k tokens in its context window, 16 times the capacity of the previous version. In effect, this gives the model 16 times more short-term memory, so it can handle substantially longer documents in a single prompt.
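To make the “16 times” figure concrete, here is a small Python sketch. It assumes “128k” means 128 × 1,024 = 131,072 tokens, which puts the previous window at 8,192 tokens; the helpers `fits` and `windows_needed` and the 100,000-token document are hypothetical illustrations, not part of any xAI API.

```python
# Assumption: "128k" is read as 128 * 1024 tokens; the article itself only
# says the new window is 16 times the size of the previous one.
NEW_CONTEXT = 128 * 1024          # 131,072 tokens
OLD_CONTEXT = NEW_CONTEXT // 16   # 8,192 tokens


def fits(num_tokens: int, context: int) -> bool:
    """Return True if a prompt of `num_tokens` tokens fits in the context window."""
    return num_tokens <= context


def windows_needed(num_tokens: int, context: int) -> int:
    """Number of separate windows a document must be split into (ceiling division)."""
    return -(-num_tokens // context)


doc_tokens = 100_000  # hypothetical long document
print(fits(doc_tokens, OLD_CONTEXT), fits(doc_tokens, NEW_CONTEXT))  # False True
print(windows_needed(doc_tokens, OLD_CONTEXT))  # 13
```

A document that would have had to be fed to the older model in 13 separate pieces fits into the larger window in one pass, which is what lets the model keep the whole text “in memory” at once.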

For those unfamiliar: in large language models (LLMs), tokens are the basic units of data the model processes. In text, a token can be a whole word or part of a word. Reportedly, the enhanced version of Grok can also handle more complex prompts.
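To show how a word can be split into several tokens, here is a toy greedy longest-match subword tokenizer in Python. It is only an illustration with a tiny, made-up vocabulary (`VOCAB`); it is not the tokenizer Grok actually uses, and production tokenizers learn vocabularies of many thousands of pieces from data.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match subword tokenizer (a toy, not Grok's tokenizer)."""
    tokens = []
    for word in text.split():
        start = 0
        while start < len(word):
            # Try the longest vocabulary piece that matches at position `start`.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in vocab:
                    tokens.append(piece)
                    start = end
                    break
            else:
                # No vocabulary piece matched: fall back to a single character.
                tokens.append(word[start])
                start += 1
    return tokens


VOCAB = {"the", "model", "token", "ize", "s", "long", "er"}  # hypothetical vocabulary
print(tokenize("the model tokenizes longer", VOCAB))
# ['the', 'model', 'token', 'ize', 's', 'long', 'er']
```

Here four words become seven tokens, which is why context limits are stated in tokens rather than words.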

Grok-1.5 performs better on coding and math problems

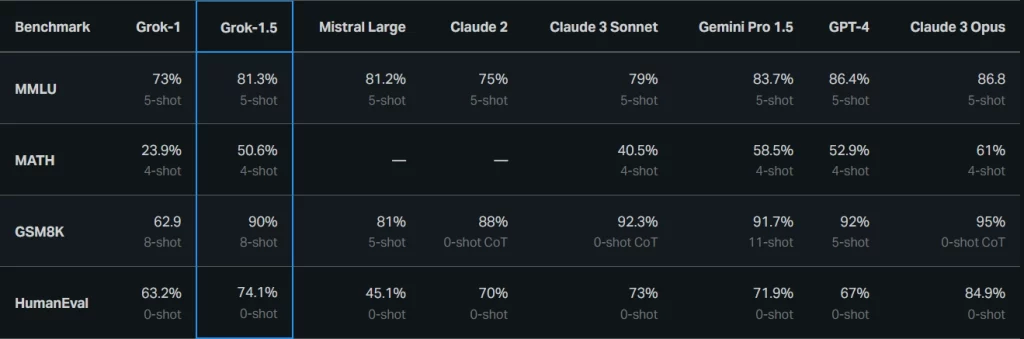

Grok-1.5 also performs better on coding and math-related tasks. To illustrate this, xAI has shared benchmark results from its own tests of the new model.

“In our tests, Grok-1.5 achieved a 50.6% score on the MATH benchmark and a 90% score on the GSM8K benchmark, two math benchmarks covering a wide range of grade school to high school competition problems,” xAI said. “Additionally, it scored 74.1% on the HumanEval benchmark, which evaluates code generation and problem-solving abilities.”

Grok-1.5 is built on a custom distributed training framework based on JAX, Rust, and Kubernetes. A custom training coordinator automatically detects problematic nodes and removes them from the training job. In short, xAI has streamlined its training process and made it more resilient to faulty nodes.
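xAI has not published how its coordinator works, so the following is only a hypothetical Python sketch of the general idea of detecting and removing problematic nodes. It assumes each node reports a heartbeat timestamp and the duration of its last training step; `NodeStatus`, `find_problematic`, `evict`, and the thresholds are illustrative names and values, not xAI’s.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class NodeStatus:
    name: str
    last_heartbeat: float  # time (seconds) of the node's most recent heartbeat
    step_time: float       # seconds the node took for its latest training step


def find_problematic(nodes, now, heartbeat_timeout=30.0, slow_factor=2.0):
    """Flag nodes that stopped reporting or run far slower than the median node."""
    if not nodes:
        return set()
    typical = median(n.step_time for n in nodes)
    bad = set()
    for n in nodes:
        if now - n.last_heartbeat > heartbeat_timeout:
            bad.add(n.name)  # node appears hung or crashed
        elif n.step_time > slow_factor * typical:
            bad.add(n.name)  # straggler that would hold back synchronous training
    return bad


def evict(nodes, bad):
    """Return the job's node list with the problematic nodes removed."""
    return [n for n in nodes if n.name not in bad]


nodes = [
    NodeStatus("node-0", 100.0, 1.0),
    NodeStatus("node-1", 100.0, 1.1),
    NodeStatus("node-2", 50.0, 1.0),   # stale heartbeat
    NodeStatus("node-3", 100.0, 3.5),  # much slower than its peers
]
bad = find_problematic(nodes, now=105.0)
print(sorted(bad))                         # ['node-2', 'node-3']
print([n.name for n in evict(nodes, bad)])  # ['node-0', 'node-1']
```

Comparing against the median step time, rather than a fixed limit, lets the check adapt to however fast the cluster is running overall. A real orchestrator would typically also reschedule work and resume from a checkpoint after removing a node; those parts are left out here.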

Grok is currently available only to X Premium+ subscribers. As for Grok-1.5, xAI says early testers and existing Grok users (X Premium+ subscribers) will get access ‘soon’. It is expected to roll out gradually to a wider audience afterwards.
