TikTok has joined Twitter, Facebook, and a host of other social media platforms in putting protocols in place to tackle the spread of fake or misleading content on its platform.

The company has unveiled a new early warning system that alerts users when they are about to share what it calls “unsubstantiated content”. These prompts will appear on videos that TikTok’s fact-checking partners PolitiFact, Lead Stories, and SciVerify are unable to confirm. TikTok says the feature will be particularly useful during unfolding events, before fact-checkers have reached a final verdict on the content.

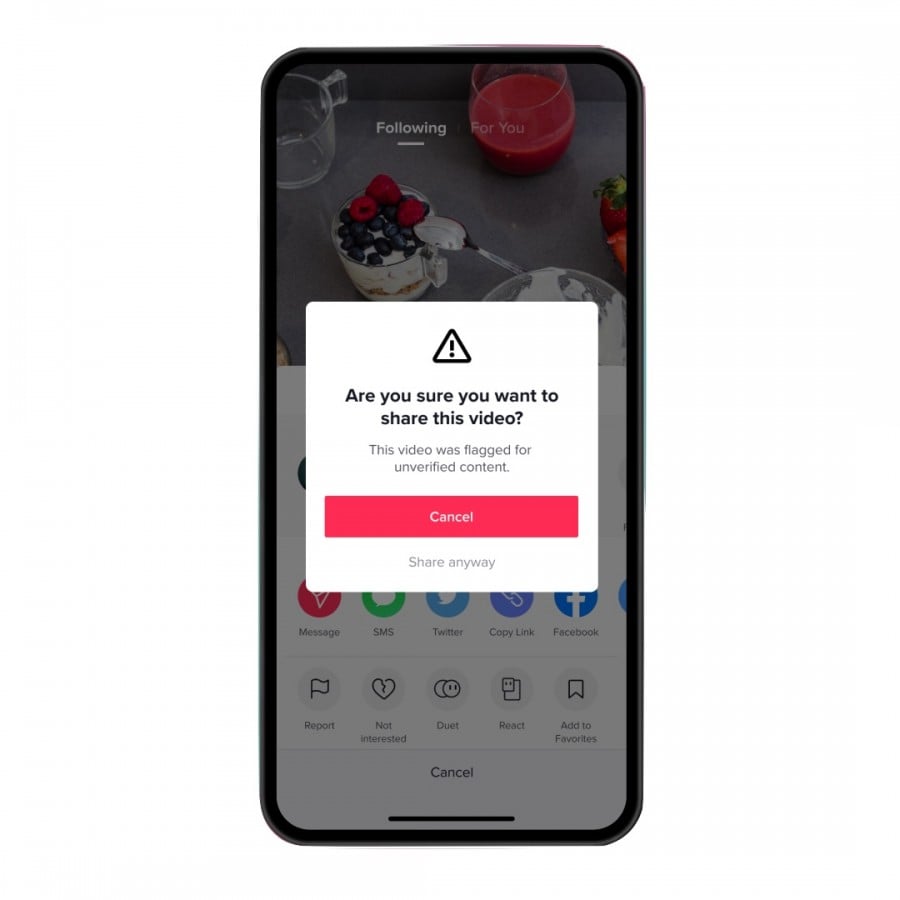

Whenever a user tries to re-share a video with unsubstantiated content, a grey caution prompt will appear at the top of the screen. The user will still be able to share the video, but only after being told that doing so could spread misleading content. TikTok explains that the goal is to make you think twice before you share unverified content. The creator of the unverified video will also get a warning from TikTok if their content is flagged, and flagged videos will not be listed on the “For You” feed, which is TikTok’s landing page.

If you as a viewer attempt to share an already flagged video, you will get a prompt reminding you that the video has been flagged as unverified content. This additional layer of caution is meant to help you consider carefully whether the video is worth sharing.

Based on beta testing of the new warning feature, TikTok says users on its platform shared 24% less misleading content, while likes on flagged videos fell by 7%. The new feature is already active for TikTok users in the US and Canada and will eventually roll out to other parts of the world.
