OpenAI announced GPT-4 yesterday, March 14, 2023. GPT-4 is a large multimodal AI model (accepting image and text inputs, emitting text outputs) that exhibits human-level performance on various professional and academic benchmarks and exams.

GPT-4 is the successor to GPT-3.5, the large language model that powers ChatGPT.

According to the research paper published by OpenAI, GPT-4 passes a simulated bar exam with a score around the top 10% of test takers; in contrast, GPT-3.5’s score was around the bottom 10%. The bar exam is a test that aspiring lawyers in many jurisdictions must pass before they can practice law, which covers work such as drafting contracts, filing lawsuits, and defending clients in court. The exam differs between states and countries, but it usually has two parts: multiple-choice questions and essays, covering areas of law such as contracts, criminal law, family law, and evidence. It is long and difficult, typically taking two days to complete, and candidates study extensively to prepare. Those who pass may practice law in that state or country.

GPT-4 performs well not only on the bar exam but also on pre-university exams, including the AP exams and the SAT. Pre-university exams such as the SAT, AP, GCE A Levels, diploma qualifications, and the International Baccalaureate (IB) Diploma are used by universities around the world to decide whether to admit an applicant to a bachelor’s degree programme.

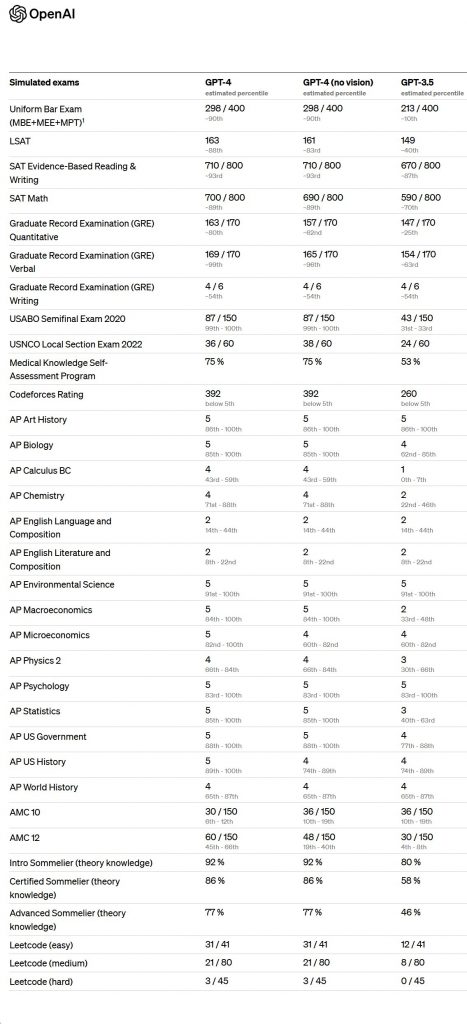

To understand the difference between the two models (GPT-3.5 and GPT-4), OpenAI tested GPT-4 on a variety of benchmarks, including simulated exams originally designed for humans. OpenAI states that it used the most recent publicly available tests (in the case of the Olympiads and AP free-response questions) or purchased 2022–2023 editions of practice exams. Note that the exams are mostly US-centric, such as the SAT and AP exams; global equivalents include the Cambridge GCE A Levels or an equivalent diploma.

The exam results of GPT-4 are stated in the table below.

Highlights

The main takeaways from these results are as follows:

- GPT-4 scored 298/400 on the Uniform Bar Exam (MBE+MEE+MPT), placing in the top 10% of test takers, compared with the bottom 10% for GPT-3.5.

- On admissions tests, GPT-4 placed in the top 12% on the LSAT (Law School Admission Test), the top 7% on SAT Evidence-Based Reading & Writing, and the top 11% on SAT Math.

- In the AP exams, GPT-4 placed within the top 15% in AP Biology, the top 30% in AP Chemistry, the top 34% in AP Physics 2, the top 15% in AP Statistics, the top 18% in AP Macroeconomics, the top 17% in AP Psychology, the top 14% in AP Art History, and the top 10% in AP Environmental Science.

- GPT-4 placed within the top 1% of scorers on the USA Biology Olympiad semifinal exam. The USA Biolympiad (USABO) is a national competition for high school students in the United States who are interested in biology. It consists of three rounds: an open exam, a semifinal exam, and the national finals. The top four students from the national finals represent the USA at the International Biology Olympiad (IBO), a worldwide competition involving student teams from over seventy countries.
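
For readers who are more used to percentile ranks, the "top X%" placements above can be converted with simple arithmetic. The following is a minimal sketch, not anything published by OpenAI: it assumes that "top X%" corresponds to roughly the (100 - X)th percentile, and the function name `top_share_to_percentile` is made up for illustration. The figures are the ones quoted in the highlights.

```python
def top_share_to_percentile(top_share_pct: float) -> float:
    """Convert a 'top X%' placement to the percentile it implies.

    Assumption for illustration: 'top X%' means about the (100 - X)th percentile.
    """
    if not 0 <= top_share_pct <= 100:
        raise ValueError("top share must be between 0 and 100")
    return 100 - top_share_pct


# 'Top X%' placements as quoted in the highlights.
placements = {
    "Uniform Bar Exam": 10,
    "LSAT": 12,
    "SAT Evidence-Based Reading & Writing": 7,
    "SAT Math": 11,
    "USA Biology Olympiad semifinal": 1,
}

implied_percentiles = {
    exam: top_share_to_percentile(share) for exam, share in placements.items()
}

for exam, percentile in implied_percentiles.items():
    print(f"{exam}: top {placements[exam]}% is roughly percentile {percentile}")
```

Read this way, a top-10% bar exam result sits at about the 90th percentile, while GPT-3.5's bottom-10% result sits at about the 10th.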

Availability of GPT-4

OpenAI is releasing GPT-4’s text input capability via ChatGPT and the API (with a waitlist). To prepare the image input capability for wider availability, OpenAI is collaborating closely with a single partner to start. OpenAI is also open-sourcing OpenAI Evals, its framework for automated evaluation of AI model performance, so that anyone can report shortcomings in its models and help guide further improvements.

