Researchers at Cornell University have invented an earphone that can continuously track full facial expressions, even when the face is covered by a mask. The ear-mounted device, named C-Face, works by observing changes in the contours of the cheeks and then translating the expressions into emojis or silent speech commands.

With C-Face, users can convey emotions to online collaborators without holding a camera in front of their faces. That could make it a useful communication tool at a time when much of the world is working or learning remotely because of the ongoing COVID-19 pandemic.

Cheng Zhang, assistant professor of information science and senior author of the research, said that “this device is simpler, less obtrusive and more capable than any existing ear-mounted wearable technologies for tracking facial expressions.”

Because the device tracks the cheek movements produced by facial muscles, C-Face can capture facial expressions even when users are wearing masks. It can also be used to control a computer system, such as a music player, using facial cues alone.


The device could also let avatars in virtual reality environments express how their users are actually feeling, and give instructors valuable information about student engagement during online lessons.

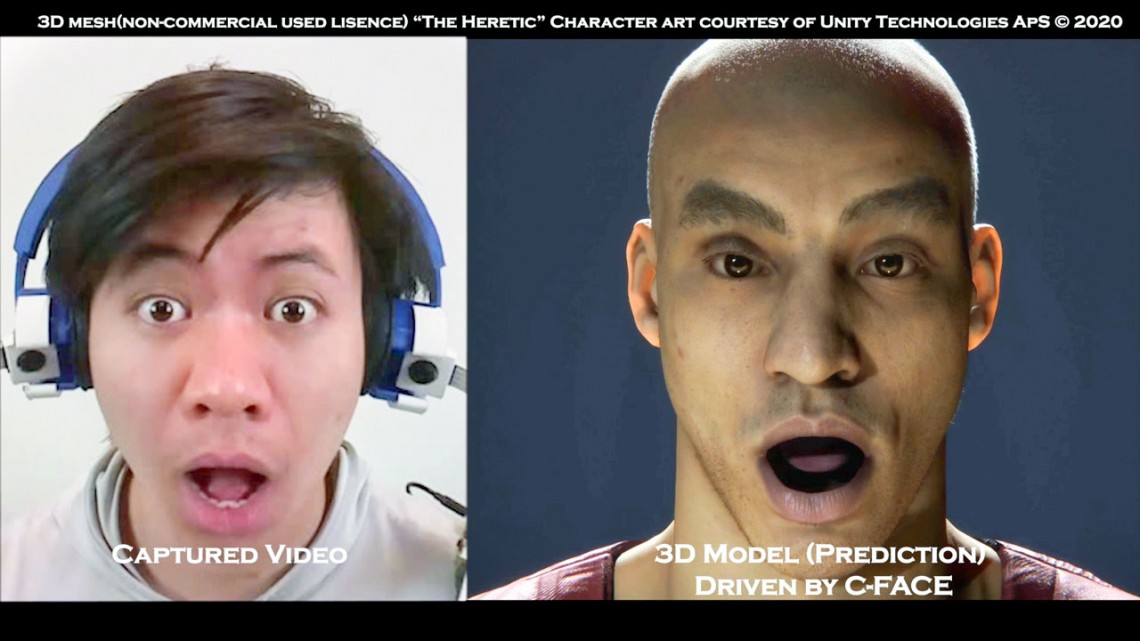

Once the device has captured images of the wearer's cheeks, the expressions are reconstructed using computer vision and a deep learning model: a convolutional neural network (a type of AI model commonly used for classifying, detecting, and retrieving images) converts the cheek contours into facial expressions.
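
The basic building block of a convolutional neural network is a 2D convolution (strictly, a cross-correlation) that slides a small filter across an image and records how strongly each patch matches it. The plain-Python sketch below shows only that single operation, followed by a ReLU, on a toy 4×4 grayscale "image". The kernel values are hand-picked for illustration; a real CNN learns its filters from training data, and C-Face's actual network architecture is not described in this article.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the
    cross-correlation map, the core operation of a convolutional layer."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            total = 0.0
            # Multiply the kernel element-wise with the patch under it and sum.
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Zero out negative responses, as a CNN activation layer would."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x4 "cheek image": dark on the left, bright on the right (a vertical edge).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical hand-picked vertical-edge detector; a trained CNN would learn its own.
kernel = [
    [-1, 1],
    [-1, 1],
]
features = relu(conv2d_valid(image, kernel))
print(features)  # → [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The strong response in the middle column marks where the edge sits. A full network stacks many such learned filters and then maps the resulting features to outputs such as landmark coordinates.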

The network translates the cheek images into 42 facial feature points, or landmarks, which represent the shapes and positions of the mouth, eyes, and eyebrows, since those features are the ones most affected by changes in expression.
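
To make the landmark output concrete, the sketch below stores the 42 landmarks as (x, y) points. It assumes 2D coordinates in millimetres, which this article does not specify. A network regressing these points would typically emit them as a flat vector of 84 numbers. The `nearest_emoji` function is a hypothetical stand-in that picks the stored template closest to the current landmarks. It illustrates one simple way landmarks could be mapped to an emoji label and is not presented as C-Face's actual recognition method.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y), assumed to be in millimetres
NUM_LANDMARKS = 42           # landmark count reported for C-Face


def flatten(landmarks: List[Point]) -> List[float]:
    """Turn 42 (x, y) points into the 84-number vector a regression
    network would typically output."""
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    return [coord for point in landmarks for coord in point]


def nearest_emoji(landmarks: List[Point], templates: Dict[str, List[Point]]) -> str:
    """Hypothetical stand-in classifier: return the label of the template whose
    landmarks are closest on average (mean point-to-point distance)."""
    def mean_dist(a: List[Point], b: List[Point]) -> float:
        return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)
    return min(templates, key=lambda label: mean_dist(landmarks, templates[label]))


# Made-up templates for illustration only.
templates = {
    "neutral": [(float(i), 0.0) for i in range(NUM_LANDMARKS)],
    "smile": [(float(i), 1.0) for i in range(NUM_LANDMARKS)],
}
query = [(float(i), 0.8) for i in range(NUM_LANDMARKS)]
print(len(flatten(query)))              # → 84
print(nearest_emoji(query, templates))  # → smile
```

A nearest-template rule keeps the example short. A practical system would more likely feed the landmarks, or the image features behind them, to a trained classifier.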

The researchers tested C-Face on nine participants and found that the average error of the reconstructed landmarks was under 0.8 mm. Emoji recognition was more than 88 percent accurate, and silent speech recognition was nearly 85 percent accurate.
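
The article does not say exactly how the error and accuracy figures were computed. The sketch below assumes two common definitions. Landmark error is taken as the mean Euclidean distance between predicted and ground-truth points, pooled over every landmark in every frame. Accuracy is taken as the fraction of samples whose predicted label matches the true one. The toy inputs are made up and are not data from the study.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y), assumed to be in millimetres


def mean_landmark_error(predicted: Sequence[Sequence[Point]],
                        ground_truth: Sequence[Sequence[Point]]) -> float:
    """Average Euclidean distance between predicted and true landmarks,
    pooled over every landmark in every frame (same unit as the inputs)."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth need the same number of frames")
    distances: List[float] = []
    for pred_frame, true_frame in zip(predicted, ground_truth):
        if len(pred_frame) != len(true_frame):
            raise ValueError("each frame needs the same number of landmarks")
        distances.extend(math.dist(p, t) for p, t in zip(pred_frame, true_frame))
    return sum(distances) / len(distances)


def accuracy(predicted_labels: Sequence[str], true_labels: Sequence[str]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    if len(predicted_labels) != len(true_labels):
        raise ValueError("label sequences must have the same length")
    correct = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return correct / len(true_labels)


# Toy data (made up, not from the study): one frame with two landmarks.
pred = [[(0.0, 0.0), (1.0, 1.0)]]
truth = [[(3.0, 4.0), (1.0, 1.0)]]
print(mean_landmark_error(pred, truth))  # → 2.5
print(accuracy(["smile", "frown", "smile", "neutral"],
               ["smile", "frown", "neutral", "neutral"]))  # → 0.75
```

Under these definitions, "under 0.8 mm" means that reconstructed points sat, on average, less than 0.8 mm from their true positions.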
