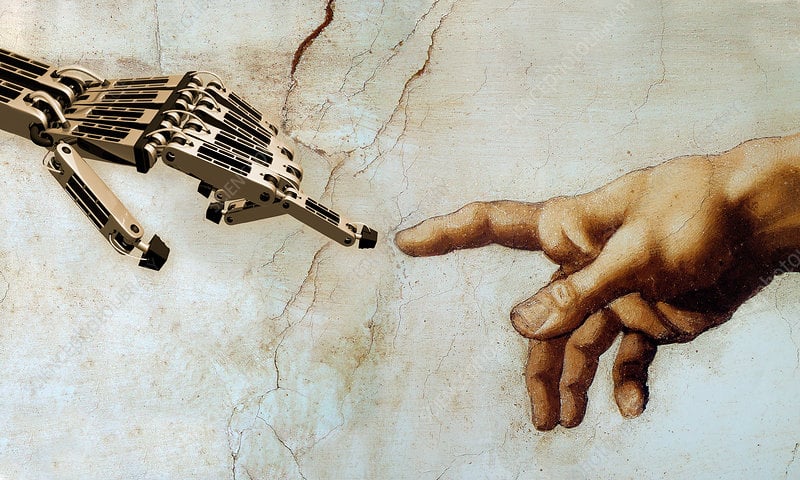

OpenAI, an AI research and deployment company financially backed by Microsoft, has published a blog post urging caution and foresight in the development of artificial general intelligence (AGI), which is defined as AI that can perform any intellectual task that humans can.

The company, which was founded by a group of prominent tech entrepreneurs and researchers, including Elon Musk and Sam Altman, stated that AGI could have profound implications for humanity, both positive and negative. The blog post outlined some of the potential benefits of AGI, such as solving global challenges like climate change and poverty, as well as some of the potential risks, such as misalignment with human values and goals, malicious use by bad actors, and existential threats to human civilization.

OpenAI argued that achieving AGI would require careful planning and coordination among various stakeholders, including researchers, policymakers, industry leaders, civil society groups, and the general public. The company also called for more research on how to ensure that AGI is aligned with human values and can be controlled by humans. Additionally, OpenAI advocated for more transparency and accountability in AI development and deployment.

The organisation hopes for a global conversation about three key questions: how to govern these AI systems, how to fairly distribute the benefits they generate, and how to fairly share access.

The blog post was published as part of OpenAI’s mission to ensure that artificial intelligence benefits all of humanity. The company operates both a non-profit arm (OpenAI Inc.) and a for-profit arm (OpenAI LP) that share this vision. OpenAI is known for developing some of the most advanced AI systems in the world, such as GPT-4 (a natural language processing system), DALL-E (an image generation system), and its most recent viral hit, ChatGPT (a chatbot built on a large language model trained on information available on the Internet).

