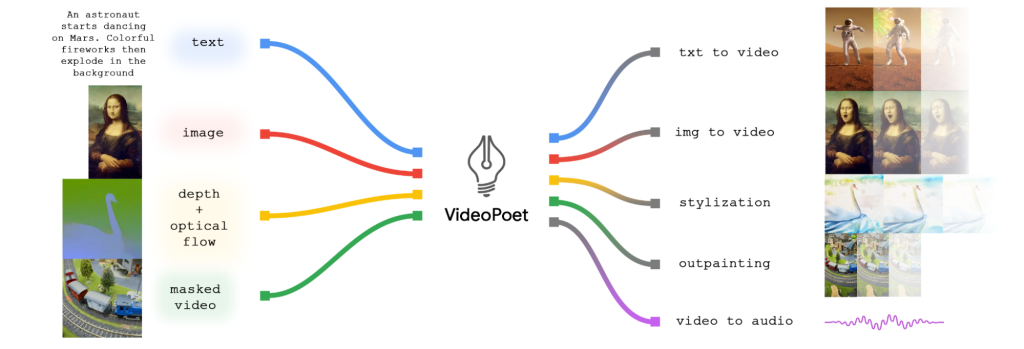

After Microsoft's Copilot AI gained the ability to generate audio clips from text prompts, Google has introduced VideoPoet, a large language model (LLM) that pushes the boundaries of video generation with 10-second clips that contain fewer artifacts. The model supports an array of video generation tasks, including text-to-video, image-to-video, video stylization, inpainting, and video-to-audio.

It generates 10-second video clips from text prompts and can also animate still images.

Unlike its predecessors, VideoPoet stands out for generating coherent videos with large motions. It can produce ten-second videos, outpacing competitors such as Runway's Gen-2. Notably, VideoPoet does not rely on specific input data for video generation, whereas other models need detailed input to deliver optimal results.

These capabilities come from a single multimodal large model, an approach that could eventually become mainstream in video generation.

Google's VideoPoet departs from the prevailing trend in video generation, where most models rely on diffusion-based approaches. Instead, VideoPoet is built on a large language model. It integrates the various video generation tasks into a single LLM, eliminating the need for separately trained components for each task.

The resulting videos vary in length, action, and style depending on the input text. VideoPoet can also turn input images into animations guided by a text prompt, showing its adaptability across different types of input.

The release of VideoPoet adds a new dimension to AI-driven video generation and hints at what may lie ahead in 2024.

(Source)